Quick Summary

🆎 Disciplined A/B Testing for Email Campaigns replaces guesswork with proof, tying subject line, creative, and offer tests to a single winning metric so that gains in opens, clicks, and conversions compound over time.

📋 Small lists can still win with email A/B testing by using 50/50 splits, running tests longer, focusing on high-impact variables, and aggregating results across comparable broadcasts or flows for stronger confidence.

📱 Choose test parameters deliberately, targeting 95 percent confidence, testing 10 to 50 percent per variant, and running 1 to 7 days for campaigns or 1 to 30 days for flows to ensure reliable results.

📊 Flow-level email split testing unlocks continuous optimization by comparing action-level emails or entire sequences, applying tests only to new entries, and automatically deploying winners to deliver compounding performance gains.

💹 AI in email A/B testing speeds variant creation, predicts send times, scores subject lines, detects anomalies, and accelerates winner selection, helping e-commerce teams drive more revenue with less manual effort.

If your email performance swings from “great” to “meh” with no clear explanation, you’re leaving revenue to chance. A/B Testing for Email Campaigns replaces guesswork with proof, so you send more winners and fewer misses.

Underperforming broadcasts waste send volume and frustrate teams. Short, inconclusive tests muddy decisions. Manual analysis slows everything down.

Thoughtful, automated email split testing fixes each of these pain points with clear goals, disciplined design, and fast winner selection.

For e-commerce brands, especially those with compact lists, the right approach to email A/B testing turns every send into a learning engine.

The result is compounding gains on opens, clicks, and sales across campaigns and flows.

What A/B Testing In Email Marketing Means (And Why It Matters For Small E-commerce Lists)

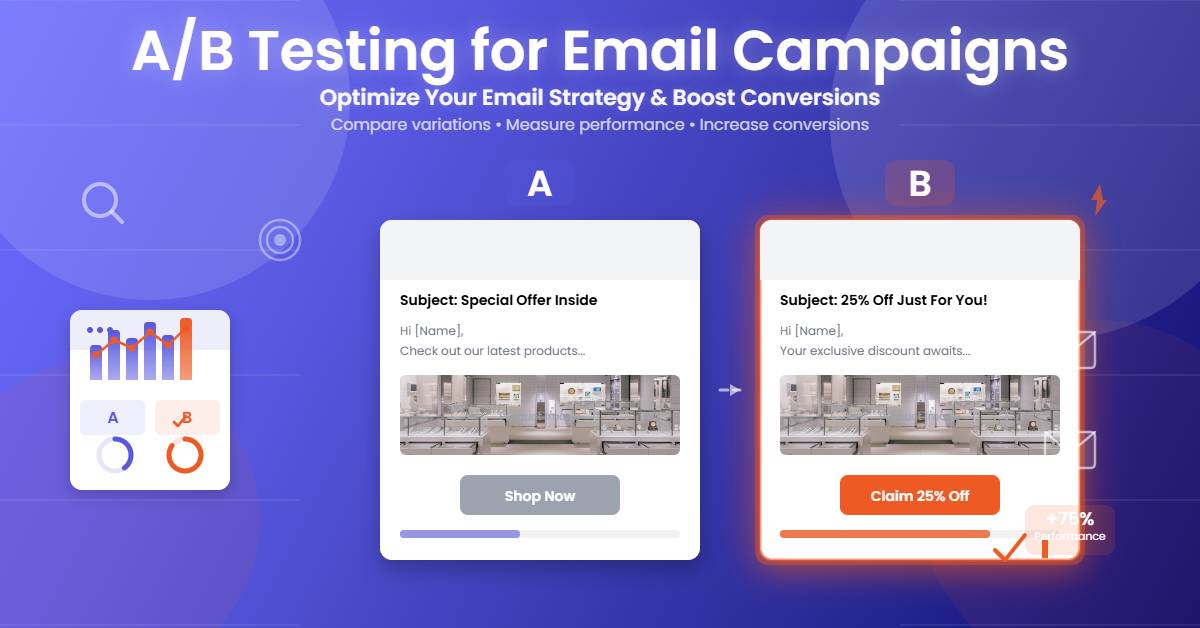

A/B testing in email marketing is straightforward. You create two or more variants of an email, send each to a defined portion of your audience, and measure which one performs better on a predefined winning metric. Teams use it to raise open rates, improve click engagement, and drive conversions, while learning what resonates with their customers.

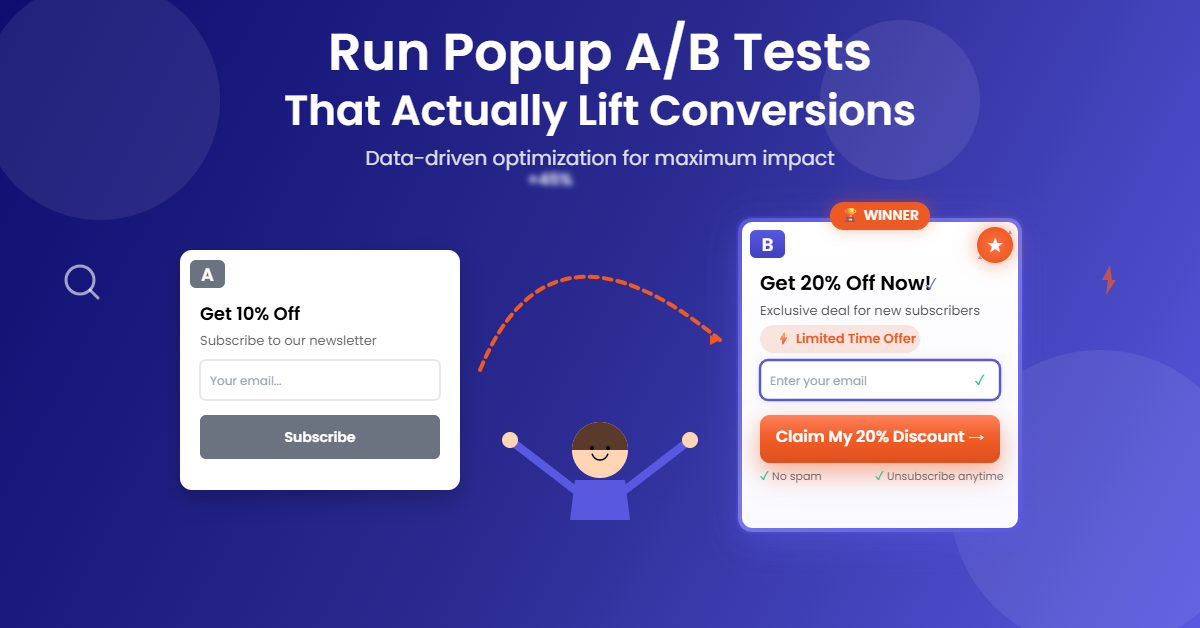

For small e-commerce lists, this matters even more. Each send carries more weight, early mistakes are costly, and “statistical significance” can feel out of reach. Small businesses can still run meaningful email A/B tests by using 50/50 splits, allowing longer test windows, aggregating learnings across similar sends, and focusing on high-impact variables. With a disciplined setup and the right automation, A/B testing makes every send smarter than the last.

What To Test First For Quick, Compounding Wins

Start where the impact is immediate and measurable. Subject lines influence opens; creative and calls-to-action influence clicks; offers influence conversions. Prioritize variables based on your goal and the stage of the journey.

Subject lines and preview text deserve early attention because they set inbox expectations and act as your first conversion point. Try curiosity versus clarity, benefit-led hooks, and including versus omitting your brand name. Testing preview text that complements the subject line can also lift open rate.

CTAs and hero images shape click behavior. Test button copy that emphasizes outcomes over actions, high-contrast button colors, and lifestyle hero images versus product-focused shots. Example tests include “Shop the Drop” versus “Get 15% Off Today,” or a lifestyle hero versus a product grid.

Offers and incentives directly impact revenue. Compare free shipping versus percentage-off, dollar-off thresholds, and short-window flash deals versus evergreen perks. Offer tests like these often yield the largest gains in revenue per recipient.

Send times and delivery cadence still move the needle, particularly for time-sensitive offers. Predictive timing can outperform standard best practices, especially when layered with segmentation.

Lifecycle-stage tests uncover bigger flow-level wins. Compare post-purchase product education against cross-sell offers, or win-back incentives against content-led re-engagement. Tests like these reveal whether messaging tailored to the customer journey outperforms one-size-fits-all content.

How To Do Email A/B Testing Step-By-Step

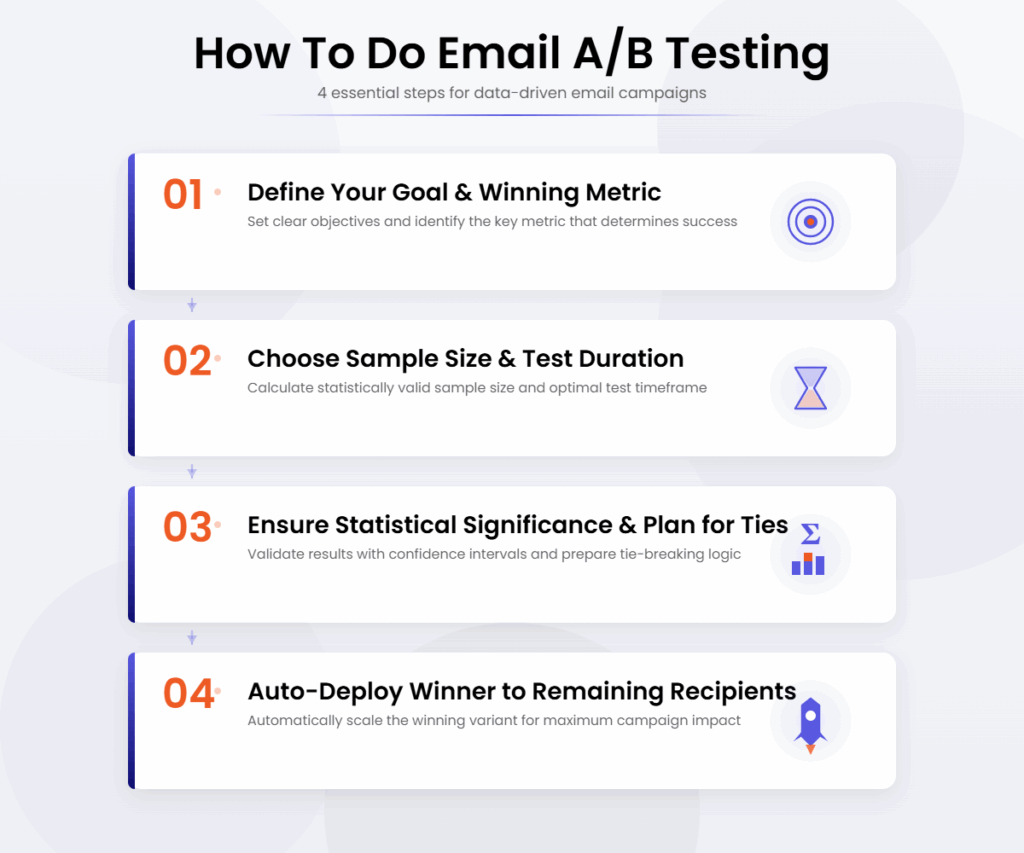

Define your goal and winning metric

Every test should serve a single primary objective. If you’re testing subject lines, choose open rate. If you’re testing creative or CTAs, choose click rate or click-to-open rate. If you’re testing offers or pricing, choose conversion rate or revenue per recipient. This clarity anchors your test design, your analysis, and your rollout plan.
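The sketch below makes the metric choice concrete. It computes the usual winning metrics from raw per-variant counts. A few things here are illustrative assumptions and not a TargetBay data format: the `VariantStats` fields, the `PRIMARY_METRIC` mapping, and the sample numbers. Conversion rate here divides by delivered recipients; some tools divide by clicks instead.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one email variant (illustrative field names)."""
    delivered: int
    unique_opens: int
    unique_clicks: int
    conversions: int
    revenue: float


def ratio(numerator: float, denominator: float) -> float:
    """Safe division: returns 0.0 when there is no denominator yet."""
    return numerator / denominator if denominator else 0.0


def compute_metrics(s: VariantStats) -> dict[str, float]:
    """Compute the common winning metrics for one variant."""
    return {
        "open_rate": ratio(s.unique_opens, s.delivered),
        "click_rate": ratio(s.unique_clicks, s.delivered),
        "click_to_open_rate": ratio(s.unique_clicks, s.unique_opens),
        "conversion_rate": ratio(s.conversions, s.delivered),
        "revenue_per_recipient": ratio(s.revenue, s.delivered),
    }


# Hypothetical mapping from test type to its primary metric, following the guidance above.
PRIMARY_METRIC = {
    "subject_line": "open_rate",
    "creative_or_cta": "click_to_open_rate",
    "offer_or_pricing": "revenue_per_recipient",
}

stats = VariantStats(delivered=1000, unique_opens=250, unique_clicks=50,
                     conversions=10, revenue=500.0)
m = compute_metrics(stats)
print(m[PRIMARY_METRIC["subject_line"]])  # 0.25
```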

Choose sample size and test duration

For broadcasts, test 10 to 50% of your audience per variant and run the test for 1 hour to 7 days, depending on how quickly your list engages. Platforms like TargetBay Email & SMS let you configure test duration and sample size. They also guard against extremes by enforcing limits such as a 10 to 90% range, and they require a minimum recipient count before running automated significance checks.
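Sample-size guidance and minimum-recipient rules exist because small samples cannot reliably detect modest lifts. Below is a minimal sketch for estimating the recipients needed per variant. It uses the standard normal-approximation formula for comparing two rates. The source only specifies the 95% confidence target; the 80% statistical power default and the example open rates are assumptions for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate recipients needed in EACH variant to detect p_control -> p_variant
    with a two-sided two-proportion z-test (normal approximation)."""
    if not (0 < p_control < 1 and 0 < p_variant < 1) or p_control == p_variant:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    z_beta = NormalDist().inv_cdf(power)                       # about 0.84 at 80% power
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_control - p_variant) ** 2)


# Detecting an open-rate lift from 20% to 23%:
print(sample_size_per_variant(0.20, 0.23))  # roughly 2,900 per variant
```

Smaller lifts need far more recipients. That is why small lists benefit from 50/50 splits, longer windows, and high-impact variables that produce bigger differences.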

For flows, allow 1 to 30 days to collect enough entries. The distribution can be 50/50 for speed or weighted toward the control to limit risk while you gather data. In TargetBay flows, tests apply to new entries only, so customers already mid-journey are unaffected.

If your broadcast list is below the automated thresholds, use a 50/50 split and evaluate results across multiple comparable sends. This approach compounds evidence and makes A/B testing practical for smaller lists.
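The sketch below shows one way to aggregate evidence across comparable sends. It sums the counts per variant and reports the pooled rates, which you would then judge (for example, with a significance test) instead of judging any single small send. `SendResult` is a hypothetical structure and the counts are made up. Pooling is only sound when the sends really are comparable in audience, offer, and timing; mixing dissimilar sends can reverse the apparent winner (Simpson's paradox).

```python
from typing import NamedTuple


class SendResult(NamedTuple):
    """Counts from one comparable broadcast (hypothetical structure)."""
    a_recipients: int
    a_successes: int  # e.g. unique opens in a subject-line test
    b_recipients: int
    b_successes: int


def pool_results(sends: list[SendResult]) -> dict[str, float]:
    """Sum counts per variant so evidence accumulates across small sends."""
    a_n = sum(s.a_recipients for s in sends)
    a_x = sum(s.a_successes for s in sends)
    b_n = sum(s.b_recipients for s in sends)
    b_x = sum(s.b_successes for s in sends)
    # How often B beat A send-by-send: a quick consistency check on the pooled result.
    b_wins = sum(
        1 for s in sends
        if s.b_successes / s.b_recipients > s.a_successes / s.a_recipients
    )
    return {
        "a_rate": a_x / a_n,
        "b_rate": b_x / b_n,
        "a_recipients": a_n,
        "b_recipients": b_n,
        "sends_where_b_won": b_wins,
    }


sends = [
    SendResult(500, 100, 500, 115),
    SendResult(400, 84, 400, 92),
    SendResult(600, 126, 600, 138),
]
print(pool_results(sends))
```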

Ensure statistical significance and plan for ties

Aim for 95% confidence before declaring a winner. If the result is not significant by the end of the test window, keep the control, extend the test, or re-run with a larger sample. When there is no clear winner, TargetBay can automatically continue with the control for the remaining recipients, preventing risky rollouts while preserving learning momentum.
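The source does not say which significance test TargetBay runs. For rate metrics such as open, click, and conversion rate, a common choice is the two-proportion z-test, sketched below with Python's standard library. It assumes reasonably large samples. It does not suit revenue per recipient, a skewed continuous metric that needs a different method (for example, a t-test or a bootstrap). The counts in the example are illustrative.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(x_a: int, n_a: int, x_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: rate_a == rate_b.

    x_* are successes (e.g. unique opens), n_* are recipients.
    Uses the pooled-variance normal approximation.
    """
    p_a, p_b = x_a / n_a, x_b / n_b
    p_pool = (x_a + x_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:  # both variants at 0% or 100%: no evidence of a difference
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def is_significant(x_a: int, n_a: int, x_b: int, n_b: int,
                   confidence: float = 0.95) -> bool:
    """True when the difference clears the chosen confidence level."""
    _, p_value = two_proportion_z_test(x_a, n_a, x_b, n_b)
    return p_value < 1 - confidence


# Control: 200 opens from 1,000; variant: 240 opens from 1,000.
print(is_significant(200, 1000, 240, 1000))  # True (p is about 0.03)
print(is_significant(200, 1000, 215, 1000))  # False (p is about 0.41)
```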

Predefine stop rules to avoid “peeking” at results and stopping early. Waiting for your planned duration prevents false positives and helps you trust the data.

Auto-deploy the winner to the remaining recipients

The biggest speed unlock is automated winner selection and sending. After the test period, the winning variant should auto-deploy to the rest of your list, using the configured metric. This reduces manual effort, locks in performance gains, and keeps your campaign calendar on time. In TargetBay Email & SMS, winner calculation is fast, auditable, and based on mathematically sound significance checks.
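The rollout rule, including the keep-the-control fallback for inconclusive tests, can be sketched as a small decision function. `TestOutcome`, `choose_rollout`, and `plan_remaining_send` are hypothetical names. The sketch assumes the p-value comes from whatever significance test your platform runs and that a higher metric value is better.

```python
from dataclasses import dataclass


@dataclass
class TestOutcome:
    name: str
    metric_value: float  # value of the configured winning metric, e.g. open rate


def choose_rollout(control: TestOutcome, challenger: TestOutcome,
                   p_value: float, confidence: float = 0.95) -> str:
    """Return the variant to send to the remaining recipients.

    The challenger replaces the control only if it scores higher on the
    winning metric AND the difference is statistically significant.
    Any other result (a tie, a loss, or an inconclusive test) keeps the control.
    """
    challenger_better = challenger.metric_value > control.metric_value
    significant = p_value < 1 - confidence
    return challenger.name if challenger_better and significant else control.name


def plan_remaining_send(remaining: list[str], winner: str) -> dict[str, list[str]]:
    """Assign every not-yet-emailed recipient to the winning variant."""
    return {winner: list(remaining)}


control = TestOutcome("A", 0.20)
challenger = TestOutcome("B", 0.24)
winner = choose_rollout(control, challenger, p_value=0.03)
print(plan_remaining_send(["r1", "r2"], winner))  # {'B': ['r1', 'r2']}
```

Falling back to the control whenever evidence is inconclusive keeps a noisy early lead from reaching the whole list.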

Email Split Testing Inside Automated Flows

Flow A/B testing is where compounding value really takes off because every new entrant benefits from better-performing experiences. Action-level testing compares variants of a single email, such as different subject lines, hero images, or CTAs within a welcome or abandoned cart email. Full-sequence testing compares entire flow paths, such as a three-step win-back with urgency versus a three-step content-first re-engagement track.

Set distribution percentages that total 100% and choose your winning metric based on the flow’s purpose. A welcome series might optimize click-to-open rate to encourage browsing, while a win-back flow might favor conversion rate or revenue per recipient. Give flow tests 1 to 30 days to accumulate participants, and require 95% confidence before rolling changes into production. If there is no clear winner when the test ends, continue using the first variant and collect more data.

Most importantly, ensure flow tests only affect new entries. Existing customers already in the journey should not be rerouted midstream. TargetBay flows respect this rule by design, preserving a clean experiment and a consistent customer experience.
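The sketch below splits new flow entrants by distribution percentages that must total 100, while existing entrants stay on their current path. It hashes the subscriber ID together with a test ID (both hypothetical identifiers here) to give each person a stable bucket, so a re-trigger does not flip their variant. This is one common bucketing approach, not a description of how TargetBay assigns entrants.

```python
import hashlib
from typing import Optional


def assign_variant(subscriber_id: str, test_id: str, weights: dict[str, int]) -> str:
    """Deterministically bucket a new entrant into a variant by percentage."""
    if sum(weights.values()) != 100:
        raise ValueError("distribution percentages must total 100")
    digest = hashlib.sha256(f"{test_id}:{subscriber_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable value in 0..99
    cumulative = 0
    for variant, pct in weights.items():
        cumulative += pct
        if bucket < cumulative:
            return variant
    raise RuntimeError("unreachable when weights total 100")


def route_entry(subscriber_id: str, test_id: str, weights: dict[str, int],
                current_path: Optional[str]) -> str:
    """Only new entrants join the test; anyone mid-journey keeps their path."""
    if current_path is not None:
        return current_path
    return assign_variant(subscriber_id, test_id, weights)


weights = {"urgency_winback": 50, "content_first_winback": 50}
print(route_entry("cust-123", "winback-test-1", weights, current_path=None))
print(route_entry("cust-456", "winback-test-1", weights,
                  current_path="original_winback"))  # original_winback
```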

A/B Testing Email Best Practices That Save Time And Boost Confidence

Test one major variable at a time. When you change both the offer and the hero image, you learn less about what actually drove the lift. Isolate meaningful changes so your insights are reusable later.

Avoid peeking and stick to your preplanned duration. Stopping early because a variant looks promising can create false confidence. Discipline is especially critical when your audience is modest and early data is noisy.

Maintain list hygiene and consistent segments. Clean, engaged lists deliver more trustworthy results. Testing a variant on a cleaner segment and another on a mixed segment can produce misleading conclusions.

Use control groups and document learnings. Keeping a control lets you measure the true lift of your changes. Build an internal library of what worked, what didn’t, and in which contexts, so you avoid re-testing settled questions.

Resist over-optimization on vanity metrics. Open rate is great for subject line tests, but if your goal is sales, keep sight of conversion rate and revenue per recipient. Align metric choice with business outcomes.

A/B Email Testing Ideas And Examples For Small Businesses

Discount framing often changes perceived value. Test “$10 off” against “10% off,” especially around common order values. Shoppers respond differently depending on cart size and category, and the psychology of round numbers can surprise you.
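The arithmetic behind this test is simple. A fixed-dollar discount and a percentage discount are worth the same at exactly one order value and diverge on either side of it. The sketch below finds that break-even point. The $10 and 10% figures come from the example above; the order values are illustrative.

```python
def breakeven_order_value(dollars_off: float, percent_off: float) -> float:
    """Order value at which '$X off' and 'Y% off' give the same discount."""
    return dollars_off * 100 / percent_off


def better_discount(order_value: float, dollars_off: float, percent_off: float) -> str:
    """Say which framing saves the shopper more at a given order value."""
    percent_savings = order_value * percent_off / 100
    if percent_savings > dollars_off:
        return "percent"
    if percent_savings < dollars_off:
        return "dollars"
    return "equal"


print(breakeven_order_value(10, 10))  # 100.0
for order in (60, 100, 150):
    print(order, better_discount(order, 10, 10))
# 60 dollars
# 100 equal
# 150 percent
```

Below the break-even value, “$10 off” is the more generous offer; above it, “10% off” is. Testing near the break-even point separates the effect of framing from the effect of actual value.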

Free shipping versus percentage-off is a classic, high-impact test. Many small stores see stronger conversion with free shipping because it removes a known friction at checkout. When margins allow, test hybrid offers that pair a small discount with free shipping and measure revenue per recipient.

Scarcity and urgency copy can lift click-through without feeling pushy. Try a gentle “selling fast” message against “ends tonight” language and monitor both clicks and spam complaints to keep the experience brand-safe.

Social proof placement near the CTA often increases clicks. If you use TargetBay Reviews, test star ratings under the hero image versus testimonial snippets above the primary CTA. This is a simple test with an immediate signal and minimal creative effort.

Mobile-first design changes are low-lift and high-reward. Test a single stacked column layout with larger tap targets against a two-column grid. Many small brands see improved click-to-open rate when mobile readability is prioritized.

AI In Email A/B Testing For Speed And Scale

AI in email A/B testing accelerates each phase of the workflow without replacing your judgment. Use AI to generate on-brand subject line variants, compressing the brainstorming cycle from hours to minutes. TargetBay’s AI email agent can produce multiple options aligned to your tone and product context, then score them for likely opens.

Predictive send times use engagement history to time delivery when each subscriber is most likely to open, outperforming static send windows. Subject line scoring and language analysis surface which phrasing patterns resonate most with your audience, helping you design stronger tests over time.
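The source does not describe how predictive send times are computed. As a deliberately simple illustration of the idea, the sketch below picks each subscriber's most frequent historical open hour. It falls back to a static window when history is thin. The `default_hour` and `min_opens` values are assumptions, and open timestamps are assumed to already be in the subscriber's local time zone.

```python
from collections import Counter
from datetime import datetime


def predicted_send_hour(open_times: list[datetime], default_hour: int = 10,
                        min_opens: int = 3) -> int:
    """Return the hour of day (0-23) at which this subscriber opens most often."""
    if len(open_times) < min_opens:
        return default_hour  # too little history: use a static send window
    counts = Counter(t.hour for t in open_times)
    # most_common(1) -> [(hour, count)]; ties go to the hour seen first
    return counts.most_common(1)[0][0]


history = [
    datetime(2024, 5, 1, 19, 5),
    datetime(2024, 5, 8, 19, 40),
    datetime(2024, 5, 15, 8, 15),
    datetime(2024, 5, 22, 19, 10),
]
print(predicted_send_hour(history))      # 19
print(predicted_send_hour(history[:2]))  # 10 (falls back to the default)
```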

Anomaly detection flags outliers and underperformance early, protecting brand reputation and list health. Faster winner selection uses sound significance checks to auto-deploy high performers, reducing manual steps and ensuring you capitalize on uplift while it’s still relevant.
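The source does not specify the detection method. A common baseline is a z-score check, which flags a send whose rate sits far outside its recent history. The three-standard-deviation threshold and the sample history below are assumptions for illustration. The same check could watch spam complaint rates as well as open rates.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `threshold` standard deviations from the history mean."""
    if len(history) < 2:
        return False  # stdev needs at least two points
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


recent_open_rates = [0.20, 0.22, 0.21, 0.19, 0.21]
print(is_anomalous(recent_open_rates, 0.08))   # True: sharp drop worth investigating
print(is_anomalous(recent_open_rates, 0.205))  # False
```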

Together, these capabilities make email A/B testing more reliable and less labor-intensive, especially for busy teams managing many SKUs, seasons, and promotions.

Conclusion

Winning with email is a process, not a lucky streak. With A/B Testing for Email Campaigns, you define the goal, set the rules, and let disciplined experiments guide your creative, offer, and timing decisions. The payoff is fewer wasted sends, faster learning, and steady lifts in opens, clicks, and sales.

TargetBay Email & SMS brings this rigor to life with configurable test duration, sample size, and winning metric; automated significance checks; and instant winner deployment for both campaigns and flows. If you also use TargetBay Reviews for social proof or TargetBay Rewards for loyalty incentives, you can test their placement and messaging to amplify trust and repeat purchases. The result is an email program that gets smarter with every send.

FAQs

What sample size and test duration should I use?

Aim to test 10 to 50% of your list per variant and target 95% confidence. For broadcasts, run 1 to 7 days based on engagement velocity. For flows, allow 1 to 30 days to collect enough entries. When using automated campaign tests, ensure your audience meets minimum recipient requirements for reliable significance; otherwise, consider 50/50 splits and aggregate learnings over multiple comparable sends.

Which winning metric should I choose?

Use open rate for subject line and preview text tests. Use click rate or click-to-open rate for creative and CTA changes. Use conversion rate or revenue per recipient for revenue-focused offers and pricing tests. Always align the metric with your core goal for that send.

Can small businesses run meaningful tests with smaller lists?

Yes. Use 50/50 splits, run tests longer, and aggregate results across similar sends to build confidence. Focus on high-impact variables like offer framing, CTAs, and hero images. When in doubt, run fewer, higher-quality tests and document what you learn for reuse later.