Quick Summary

◼️ A/B testing best practices begin with goal alignment: choose opens, clicks, click-to-open, or conversions as the winning metric based on campaign intent, so decisions protect revenue and don’t conflate awareness with action.

◼️ Use disciplined test parameters: test on 10–90% of your list, include at least about 1,000 recipients, and run each test for 1 hour to 7 days to avoid noise, list fatigue, and unreliable results from undersized samples.

◼️ Demand 95% statistical confidence to avoid false positives, deploy winners decisively, and send the control when no clear winner emerges, so you keep learning reliably while protecting performance for the remaining recipients.

◼️ Prioritize high-impact variables first: subject lines, preheaders, offer framing, hero images, CTAs, layout, product order, and send times (tested on their own). Change one major element per test for clean attribution.

◼️ Automate testing operations with TargetBay Email & SMS to configure metrics, sample sizes, and durations, auto-deploy winners, and document insights, compounding gains across campaigns while reducing manual effort and analysis overhead.

Email performance shouldn’t be a guessing game. If your open and click rates drift without explanation, chances are your testing process is the culprit, not your audience. The cure is disciplined A/B testing designed for ecommerce realities, not generic marketing advice.

When you set the right goal, pick the correct sample size, and demand significance, every send becomes a confident step toward higher revenue. The right tooling removes manual drudgery, accelerates learning, and protects your list from waste. This guide gives ecommerce marketers a practical blueprint to test smarter and win more often—without slowing down launches.

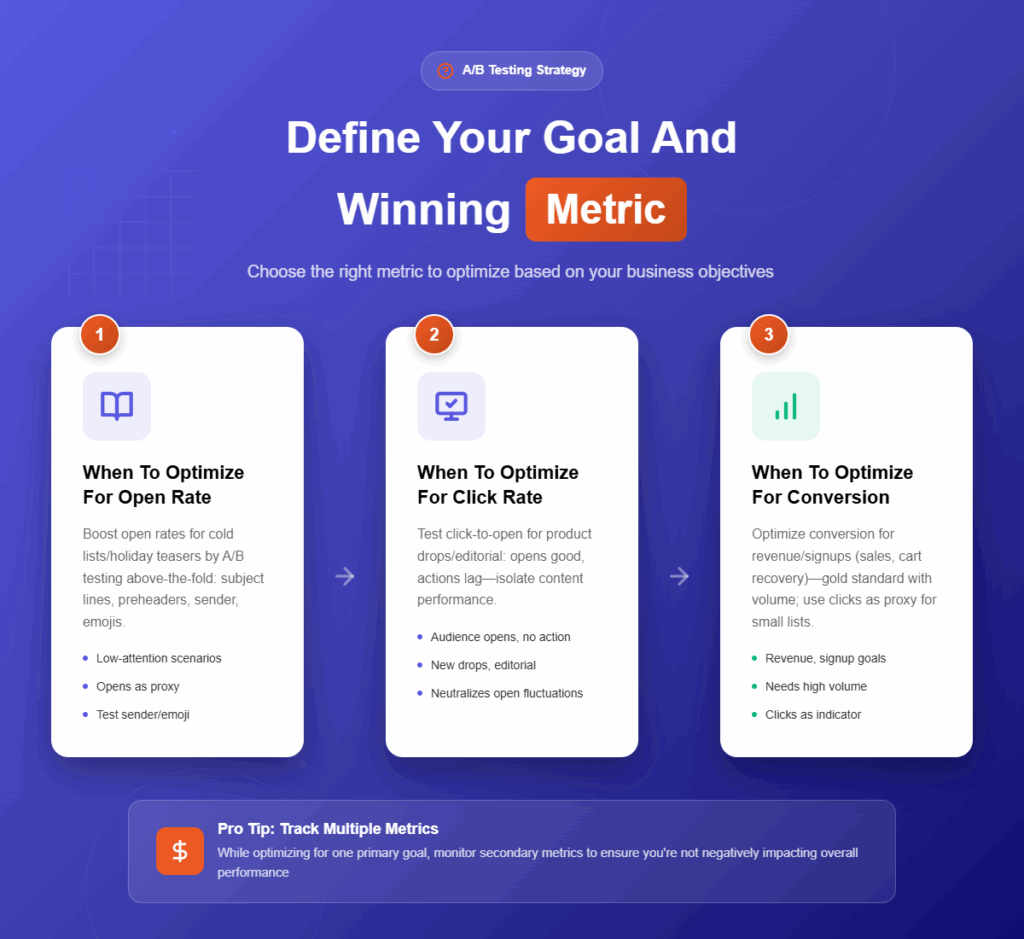

Define Your Goal And Winning Metric

Metrics are not interchangeable. Picking the wrong winning metric confuses outcomes and can kneecap revenue even if “the test won.” Set goals that tie directly to your campaign objective and buying journey stage.

When To Optimize For Open Rate

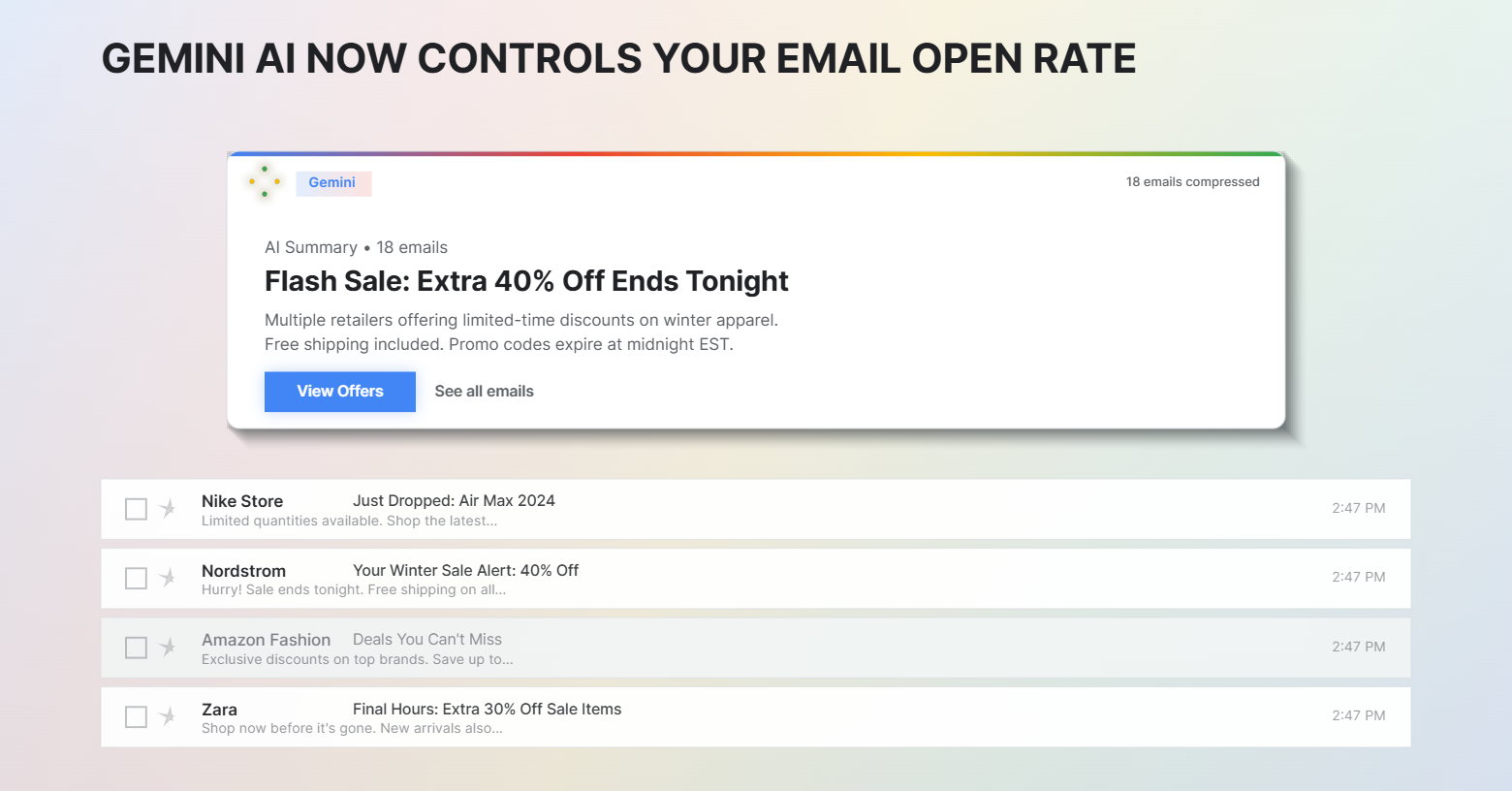

Optimize for open rate when the biggest blocker is attention, such as a cold list, seasonal re-engagement, or a holiday teaser. Your variable should be something subscribers see before they open: subject line, preheader, sender name, or emoji usage. If open rates are consistently weak while click-to-open is strong, your content is working for the people who do open; treat open rate as your proxy for awareness and aim your tests there.

When To Optimize For Click Rate And Click-To-Open

Optimize for click or click-to-open when your audience is opening but not acting. This is common for new product drops, editorial content, and collection highlights. Click-to-open isolates content performance by neutralizing fluctuations in open behavior, making it the better choice when subject lines vary little but in-email elements change.
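To make the difference between these metrics concrete, here is a minimal sketch that computes all three from raw counts. It assumes rates are measured against delivered emails and unique opens/clicks; your platform may use sends or total events instead. The function name `email_rates` and the numbers are hypothetical.

```python
def email_rates(delivered: int, unique_opens: int, unique_clicks: int) -> dict:
    """Return open rate, click rate, and click-to-open rate as fractions (0.0 to 1.0)."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    open_rate = unique_opens / delivered
    click_rate = unique_clicks / delivered
    # Click-to-open divides clicks by opens, so it isolates what happens after the open.
    click_to_open = unique_clicks / unique_opens if unique_opens else 0.0
    return {"open_rate": open_rate, "click_rate": click_rate, "click_to_open": click_to_open}

# Two hypothetical variants with identical click rates but different content performance.
a = email_rates(delivered=10_000, unique_opens=2_000, unique_clicks=200)
b = email_rates(delivered=10_000, unique_opens=2_500, unique_clicks=200)
print(a["click_rate"], b["click_rate"])        # 0.02 0.02
print(a["click_to_open"], b["click_to_open"])  # 0.1 0.08
```

Both variants earn the same click rate, but variant A’s content converts a larger share of its openers into clickers, which click-to-open exposes and click rate hides.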

When To Optimize For Conversion

Optimize for conversion when the email’s goal is revenue or signups, including sale announcements, cart recovery, and win-back offers. This is the gold standard but requires adequate volume. If your list is smaller or conversions are sparse, use click rate as a leading indicator, then validate on a larger send with conversion as the primary metric.

Set Test Parameters Correctly

Winning tests require the right audience and a clear stop condition. Skew these inputs and you risk “false winners” that burn your list and budget.

Choose a test sample size between 10% and 90% of your total recipients. Smaller brands with limited volume can lean toward higher test percentages to reach significance, while larger lists can test on 10%–30% and still reach confidence quickly. As a rule of thumb, include at least about 1,000 recipients in your test so random variance doesn’t decide the winner.
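The sizing rules above can be checked before a send with a small planner. This is a sketch under stated assumptions: the 1,000 floor applies to the whole test group (as written above), the test group is split evenly across variants, and `plan_split` is a hypothetical helper, not a platform API.

```python
MIN_TEST_RECIPIENTS = 1_000  # rule-of-thumb floor from this guide

def plan_split(list_size: int, test_fraction: float, variants: int = 2) -> dict:
    """Split a send into a test group (divided across variants) and a remainder."""
    if not 0.10 <= test_fraction <= 0.90:
        raise ValueError("test_fraction must be between 0.10 and 0.90")
    test_size = round(list_size * test_fraction)
    if test_size < MIN_TEST_RECIPIENTS:
        raise ValueError(
            f"test group of {test_size} is under {MIN_TEST_RECIPIENTS}; raise test_fraction"
        )
    return {
        "test_size": test_size,
        "per_variant": test_size // variants,
        "remainder": list_size - test_size,
    }

print(plan_split(50_000, 0.20))  # {'test_size': 10000, 'per_variant': 5000, 'remainder': 40000}
print(plan_split(4_000, 0.50))   # {'test_size': 2000, 'per_variant': 1000, 'remainder': 2000}
```

A 4,000-person list tested at 20% would yield only 800 test recipients and raise an error, which is exactly why smaller brands need higher test percentages.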

Set the test duration between 1 hour and 7 days based on your send volume and engagement patterns. Fast-moving promos and flash sales merit a 1–4 hour test so the remainder can still benefit from the winner. Evergreen content and newsletters can run for 24–72 hours to catch delayed opens and clicks without stalling the campaign. Tests longer than seven days invite noise from outside factors and list fatigue.
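One way to encode these duration guidelines is a lookup of defaults clamped to the 1-hour to 7-day window. The campaign-type names and default hours below are hypothetical picks from the ranges in this section, not recommendations from any specific tool.

```python
from datetime import timedelta
from typing import Optional

# Hypothetical defaults picked from the ranges in this guide; tune them to your own data.
DEFAULT_TEST_HOURS = {
    "flash_sale": 2,    # 1 to 4 hours, so the remainder still gets the winner in time
    "promo": 4,
    "newsletter": 48,   # 24 to 72 hours to catch delayed opens and clicks
    "evergreen": 72,
}
MIN_HOURS, MAX_HOURS = 1, 7 * 24

def pick_test_window(campaign_type: str, hours: Optional[float] = None) -> timedelta:
    """Return a test window, clamped to the 1-hour to 7-day range."""
    chosen = DEFAULT_TEST_HOURS[campaign_type] if hours is None else hours
    return timedelta(hours=max(MIN_HOURS, min(chosen, MAX_HOURS)))

print(pick_test_window("flash_sale"))             # 2:00:00
print(pick_test_window("newsletter", hours=500))  # 7 days, 0:00:00
```

Clamping rather than raising is a design choice here: an override outside the window is pulled back to the nearest allowed value instead of blocking the send.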


Demand Statistical Significance

A lift isn’t real unless it’s statistically significant. Target 95% confidence to protect yourself from misleading results, especially when differences are small. This standard reduces the risk of overreacting to random spikes and sending an underperforming variant to the majority of your list.
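For readers who want to see what “95% confidence” means in practice, here is a sketch using a standard pooled two-proportion z-test on click counts. This is one common method, assumed for illustration; your email platform may calculate significance differently. The counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z(successes_a: int, n_a: int, successes_b: int, n_b: int):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

def is_significant(p_value: float, confidence: float = 0.95) -> bool:
    return p_value < 1 - confidence

# Hypothetical click counts: 5,000 recipients per variant.
_, p_big = two_proportion_z(200, 5_000, 260, 5_000)    # 4.0% vs 5.2%
_, p_small = two_proportion_z(200, 5_000, 215, 5_000)  # 4.0% vs 4.3%
print(is_significant(p_big), is_significant(p_small))  # True False
```

The 0.3-point lift looks like a win on a dashboard, but at this sample size it is well within random noise.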

If there is no clear winner by the deadline, send the control to the remaining recipients to avoid compounding risk. Record the outcome, tighten your variables, increase the sample next time, or change the winning metric based on your goal. Ties or insufficient data should be handled gracefully and documented so your testing backlog gets smarter, not longer.
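The fallback rule can be written as a small decision function that also returns a label for your test log. This is a hypothetical helper; it assumes you already have each variant’s rate on the winning metric and a p-value from your significance test.

```python
def decide_remainder(rate_control: float, rate_variant: float, p_value: float,
                     confidence: float = 0.95) -> tuple:
    """Return (what to send to the remaining recipients, outcome label to log)."""
    if p_value < 1 - confidence:
        if rate_variant > rate_control:
            return "variant", "variant won"
        return "control", "control won"
    # No clear winner by the deadline: default to the control and record it.
    return "control", "no clear winner"

print(decide_remainder(0.040, 0.052, p_value=0.004))  # ('variant', 'variant won')
print(decide_remainder(0.040, 0.043, p_value=0.45))   # ('control', 'no clear winner')
```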

What to Test First: High-Impact Variables

Not all tests are equal. Start with variables that influence visibility and action, then move to refinements once you’ve banked the biggest gains. Limit each test to one major variable to isolate cause and effect.

Subject lines and preheaders are your first lever when opens are sluggish. Test clarity versus curiosity, benefit-led versus urgency-led, and the use of brackets or emojis. Keep preheaders aligned to reinforce the hook, not repeat it.

Offer framing and urgency cues dictate perceived value. Test percentage off versus dollar off, tiered discounts versus a single bold offer, and urgency phrasing such as “Ends tonight” versus “Limited quantities.” Visual anchors like the hero image shape first impressions, so experiment with product-in-context versus clean studio shots or lifestyle versus close-up detail.

Calls-to-action and layout affect scannability and intent. Test CTA copy such as “Shop the Drop” versus “See Best Sellers,” adjust color contrast for accessibility, and evaluate above-the-fold CTA placement versus mid-email. Product ordering can prioritize best sellers for frictionless decision-making or new arrivals for discovery; test which aligns with your audience’s intent. Finally, treat send time as its own high-impact variable rather than mixing it with content changes in the same test.

Template And Content Best Practices

Consistency is your friend in controlled experiments. Keep controls stable and change only the element you are testing. That means the same template, the same list segmentation, and the same cadence window.

Adopt accessible, mobile-first templates that load fast, are usable with screen readers, and maintain contrast ratios that meet accessibility standards. Many ecommerce opens happen on mobile, so thumb-friendly buttons, concise copy, and compressed images are non-negotiable. This matters for content tests because poor accessibility can mask an otherwise strong variant.

Standardize tracking so clicks and conversions are comparable across variants. Use a consistent UTM structure, make sure deep links point to the same page type, and align your attribution windows. When you test template layouts, make sure layout changes don’t alter link density in ways that artificially inflate or deflate clicks. Data integrity is a prerequisite for trustworthy decisions.
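A consistent UTM structure is easiest to enforce with one helper that tags every link. The sketch below uses only Python’s standard `urllib.parse`; the naming convention (`utm_source=newsletter`, `utm_content` carrying the variant name) is a hypothetical example, so substitute your own.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def tag_link(url: str, campaign: str, variant: str,
             source: str = "newsletter", medium: str = "email") -> str:
    """Append one consistent set of UTM parameters, keeping any existing query string."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": variant,  # the only value that differs between variants
    })
    return urlunsplit(parts._replace(query=urlencode(query)))

print(tag_link("https://shop.example.com/collections/new?sort=best", "spring_drop", "variant_b"))
# https://shop.example.com/collections/new?sort=best&utm_source=newsletter&utm_medium=email&utm_campaign=spring_drop&utm_content=variant_b
```

Because only `utm_content` changes between variants, analytics can attribute clicks and conversions to each variant without any other parameter drifting.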

Send-Time Testing For Ecommerce

Send time is one of the cleanest wins when you approach it methodically. Start broad by testing weekday versus weekend performance for your primary list, then refine into day-by-day patterns. Once you know the best day, run hour-based tests to identify morning, midday, or evening sweet spots.
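The broad-to-narrow approach amounts to grouping the same results by progressively finer time buckets. Here is a sketch that assumes you can export each test send as (send timestamp, delivered, clicks); the bucket boundaries and data are hypothetical. Compare bucket rates with the same 95% significance standard before acting on them.

```python
from collections import defaultdict
from datetime import datetime

def click_rate_by(sends, bucket):
    """sends: iterable of (sent_at, delivered, clicks); bucket maps a datetime to a label."""
    delivered, clicks = defaultdict(int), defaultdict(int)
    for sent_at, n_delivered, n_clicks in sends:
        key = bucket(sent_at)
        delivered[key] += n_delivered
        clicks[key] += n_clicks
    return {key: clicks[key] / delivered[key] for key in delivered}

def weekday_vs_weekend(ts: datetime) -> str:
    return "weekend" if ts.weekday() >= 5 else "weekday"

def day_of_week(ts: datetime) -> str:
    return ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")[ts.weekday()]

def daypart(ts: datetime) -> str:
    # Hypothetical boundaries; adjust to how your audience actually behaves.
    if ts.hour < 11:
        return "morning"
    return "midday" if ts.hour < 16 else "evening"

sends = [
    (datetime(2024, 5, 4, 9), 1_000, 30),   # Saturday morning
    (datetime(2024, 5, 6, 9), 1_000, 20),   # Monday morning
    (datetime(2024, 5, 7, 19), 1_000, 25),  # Tuesday evening
]
print(click_rate_by(sends, weekday_vs_weekend))  # {'weekend': 0.03, 'weekday': 0.0225}
```

Start with `weekday_vs_weekend`, move to `day_of_week` once a pattern appears, then use `daypart` for hour-level tests on the winning day.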

Account for subscriber time zones rather than sending on your own schedule. What performs at 9 a.m. in New York may underdeliver in Los Angeles. If your platform supports local time sends, keep that setting consistent during tests to avoid cross-timezone noise.
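If your platform doesn’t handle local-time sends, the underlying calculation looks roughly like this: convert a fixed local send time into a UTC instant per subscriber time zone. This sketch uses Python’s standard `zoneinfo` module (Python 3.9+, which needs system time-zone data or the `tzdata` package); `local_send_instant` is a hypothetical helper.

```python
from datetime import date, datetime, time, timezone
from zoneinfo import ZoneInfo  # Python 3.9+; needs system tz data or the tzdata package

def local_send_instant(send_date: date, local_time: time, tz_name: str) -> datetime:
    """Return the UTC instant at which to send so it lands at local_time for the subscriber."""
    local = datetime.combine(send_date, local_time, tzinfo=ZoneInfo(tz_name))
    return local.astimezone(timezone.utc)

for tz in ("America/New_York", "America/Los_Angeles"):
    print(tz, local_send_instant(date(2024, 6, 4), time(9, 0), tz))
# America/New_York 2024-06-04 13:00:00+00:00
# America/Los_Angeles 2024-06-04 16:00:00+00:00
```

Using named zones rather than fixed UTC offsets keeps daylight saving changes from shifting a "9 a.m." test by an hour partway through the year.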

Avoid list fatigue by not stacking multiple tests on consecutive days for the same audience. Send-time experiments can cannibalize engagement if you bombard subscribers. Treat send-time testing as a recurring optimization cadence, not a daily obsession, and retest seasonally as purchasing patterns shift during holidays and peak sales events.
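A simple guard against stacked tests is to scan the test calendar for the same audience appearing too close together. The sketch below assumes a schedule of (audience, send date) pairs; the audience names and the two-day minimum gap are hypothetical.

```python
from collections import defaultdict
from datetime import date

def fatigue_conflicts(schedule, min_gap_days: int = 2):
    """schedule: (audience, send_date) pairs. Flag tests on the same audience that are too close."""
    by_audience = defaultdict(list)
    for audience, send_date in schedule:
        by_audience[audience].append(send_date)
    conflicts = []
    for audience, dates in by_audience.items():
        dates.sort()
        for earlier, later in zip(dates, dates[1:]):
            if (later - earlier).days < min_gap_days:
                conflicts.append((audience, earlier, later))
    return conflicts

schedule = [
    ("vip", date(2024, 6, 3)), ("vip", date(2024, 6, 4)),    # back-to-back: flagged
    ("all", date(2024, 6, 3)), ("all", date(2024, 6, 10)),  # a week apart: fine
]
print(fatigue_conflicts(schedule))
# [('vip', datetime.date(2024, 6, 3), datetime.date(2024, 6, 4))]
```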

Automate And Operationalize Your Testing Program

Manual analysis slows launches and invites human error. Preselect the winning metric before you build variants, define sample size and duration upfront, and automate winner rollout to the remainder of your list. This keeps marketers moving fast without sacrificing rigor.
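“Define everything upfront” can be enforced in code by validating a test configuration before any variant is built. This is a generic sketch, not TargetBay’s configuration format or any platform’s API; the class name and metric names are hypothetical, and the bounds come from this guide.

```python
from dataclasses import dataclass
from datetime import timedelta

# Hypothetical metric names; map them to whatever your platform reports.
WINNING_METRICS = {"open_rate", "click_rate", "click_to_open", "conversion_rate"}

@dataclass(frozen=True)
class ABTestConfig:
    winning_metric: str
    test_fraction: float
    duration: timedelta
    confidence: float = 0.95

    def __post_init__(self):
        # Fail before any variant is built, not after the send.
        if self.winning_metric not in WINNING_METRICS:
            raise ValueError(f"unknown winning metric: {self.winning_metric!r}")
        if not 0.10 <= self.test_fraction <= 0.90:
            raise ValueError("test_fraction must be between 0.10 and 0.90")
        if not timedelta(hours=1) <= self.duration <= timedelta(days=7):
            raise ValueError("duration must be between 1 hour and 7 days")

config = ABTestConfig("click_to_open", test_fraction=0.20, duration=timedelta(hours=4))
```

Making the config frozen means the winning metric cannot be swapped after results come in, which is one easy way to avoid picking whichever metric happened to win.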

TargetBay Email & SMS is built for this workflow. You can configure test duration, sample size, and the winning metric, monitor performance automatically, and let the system deploy the winner to remaining recipients once significance is reached. Results are calculated with statistically sound methods and surfaced with clear reasoning, so your team focuses on strategy, not spreadsheets.

Institutionalize learning by logging hypotheses, outcomes, and next steps. Keep a living backlog that prioritizes tests with the largest potential business impact. The compound effect of well-documented tests is a smarter program that scales across campaigns, seasons, and segments.
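A living backlog needs only a few fields per experiment. Here is a minimal sketch that assumes you attach your own rough impact estimate to each idea; the record fields and sample entries are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ExperimentRecord:
    hypothesis: str
    winning_metric: str
    estimated_impact: float   # hypothetical: your own estimate of revenue at stake
    outcome: str = "pending"  # e.g. "variant won", "control won", "no clear winner"
    next_step: str = ""

def prioritized_backlog(records):
    """Pending experiments, largest estimated business impact first."""
    pending = [r for r in records if r.outcome == "pending"]
    return sorted(pending, key=lambda r: r.estimated_impact, reverse=True)

log = [
    ExperimentRecord("Dollar-off beats percent-off for AOV", "conversion_rate", 12_000),
    ExperimentRecord("Emoji in subject lifts opens", "open_rate", 3_000,
                     outcome="no clear winner", next_step="retest with a larger sample"),
    ExperimentRecord("Review quote near hero lifts click-to-open", "click_to_open", 8_000),
]
for record in prioritized_backlog(log):
    print(record.hypothesis)
```

Completed tests stay in the log with their outcome and next step, so a "no clear winner" result still feeds the next round instead of disappearing.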

Real Ecommerce Examples And Testable Hypotheses

The best A/B testing examples start with a simple, falsifiable hypothesis. Clear framing keeps tests focused and makes learnings transferable across campaigns. Use these ideas as a starting point and adapt them to your brand.

Test offer framing by comparing “25% off sitewide” against “$20 off orders over $80.” Hypothesize which framing better matches your average order value and margin realities. Validate both click rate and conversion to see beyond curiosity clicks.
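Before running this test, it helps to see what each offer actually costs at different order values. The arithmetic below is a sketch with hypothetical helper names and sample order totals.

```python
def percent_off(order_total: float, pct: float) -> float:
    """Discount value of a percentage-off offer."""
    return order_total * pct / 100

def threshold_off(order_total: float, amount: float, minimum: float) -> float:
    """Discount value of a fixed-amount offer that requires a minimum order."""
    return float(amount) if order_total >= minimum else 0.0

for total in (60, 80, 120):
    print(total, percent_off(total, 25), threshold_off(total, 20, 80))
# 60 15.0 0.0
# 80 20.0 20.0
# 120 30.0 20.0
```

The two offers cost the same at exactly $80. Below that, only the percentage offer gives a discount; above it, the percentage offer gives away more per order. That is why the right framing depends on where your average order value sits.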

Test social proof placement by adding a review quote near the hero or below the first product grid. If you use TargetBay Reviews, pull a top-rated review with a photo to increase credibility. Hypothesize that upstream social proof will improve click-to-open and downstream conversion by reinforcing trust.

Test free shipping offers with “Free shipping over $50” against “Free shipping today only.” One tests a structural incentive tied to order value; the other tests urgency. Use conversion as the winning metric, then segment follow-ups to capitalize on near-miss threshold behavior.
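Segmenting near-miss shoppers can be as simple as selecting carts that landed just under the threshold. This sketch assumes you can export cart totals keyed by customer ID; the $15 band and the sample data are hypothetical.

```python
def near_miss_segment(cart_totals: dict, threshold: float = 50.0, band: float = 15.0) -> list:
    """IDs of customers whose last cart fell just short of the free-shipping threshold."""
    return sorted(
        customer_id
        for customer_id, total in cart_totals.items()
        if threshold - band <= total < threshold
    )

carts = {"c1": 48.0, "c2": 52.0, "c3": 30.0, "c4": 35.0}  # hypothetical cart totals
print(near_miss_segment(carts))  # ['c1', 'c4']
```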

Test destination type by linking the hero CTA to a product detail page versus a curated collection. Hypothesize that collections drive higher click rates for discovery-oriented drops, while PDPs lift conversion for limited releases or restocks with high intent.

Test hero imagery by pitting lifestyle imagery that shows the product in use against a clean studio hero. For brands with a strong visual identity, hypothesize that lifestyle imagery boosts click-to-open, while studio shots improve clarity and conversion.

Test CTA copy with “Shop Now” against “See the Drop” or “Claim Your Offer.” Match the voice to the audience and state of awareness. Short, action-led CTAs often outperform clever ones, but test to be sure.

Conclusion

A rigorous testing program turns gut feelings into reliable revenue. Define the right metric, set controlled parameters, demand 95% confidence, and prioritize high-impact variables before fine-tuning templates and timing. The result is fewer false winners, less wasted volume, and a steady lift in opens, clicks, and conversion.

Tools matter when speed and scale are at stake. TargetBay Email & SMS lets ecommerce teams configure tests, calculate significance automatically, and send winners to the remainder—so every campaign gets smarter with less manual work. If your strategy includes social proof or loyalty nudges, TargetBay Reviews and TargetBay Rewards integrate seamlessly to amplify results without complicating your testing framework.

FAQs

What is A/B testing in email marketing?

A/B testing compares two or more email variants by sending them to a test segment, measuring a chosen metric such as opens, clicks, or conversions, and automatically sending the winning variant to the remaining recipients. It replaces guesswork with repeatable, data-backed improvements.

How big should my sample be and how long should I run tests?

Aim for a test sample between 10% and 90% of your list, with at least about 1,000 recipients in the test group. Run the test for 1 hour to 7 days depending on your send volume and campaign type, and declare a winner only if it reaches around 95% confidence within that window. Fast promotions benefit from shorter windows so more of the remaining sends get the winner.

What if there’s no clear winner?

Default to the control for the remaining recipients to protect performance. Record the learning, consider increasing the sample size, clarify the variable change, or choose a different winning metric aligned to your goal for the next test.

What are A/B testing best practices for email content?

Keep one major variable per test, maintain a consistent template and list segment, and standardize link tracking. Focus first on subject lines, preheaders, offers, and CTAs before exploring creative refinements and personalization layers.

What are the best practices for A/B testing email templates?

Use accessible, mobile-first templates with high contrast, fast-loading images, and large tap targets. Change only one layout element at a time and ensure link density remains comparable so click data stays trustworthy.